The Stickatron, the Elastiphone and the Perceptron: three co-played entangled instruments

Steve Symons

Click here for the video presentation

Abstract:

Emerging research in the field of digital musical instruments (DMIs) has drawn on entanglement ideas from post-human theories as a way to discuss areas such as open mixer feedback musicianship [1, 2] and human-AI musical creativity [3]. Such research extends DMI research by moving from the idea of ‘playing’, as in controlling an instrument, to an experience where the human player and the system mutually engage. Little research, however, has considered applying entanglement to two or more players collaborating within a single musical system.

I propose to present a series of three instruments that are part of a wider practice of applying post-human ideas of entanglement [4] and apparatus [5], alongside participatory sense-making [6], to shared musical experiences. Elements of these paradigms appear in several existing multi-player projects. In SensorBand’s SoundNet [7], a network of interconnected climbing ropes (mapped and sonified via tension sensors) enmeshes players’ individual movements. Fels directly couples players with the Tooka [8], where a constant input of air from two participants onto a shared pressure sensor ensures that any sound is co-produced. TONETABLE [9] entangles individual contributions by creating an interactive environment facilitated through a fluid dynamics simulation: players don’t interact directly but by affecting the simulated water.

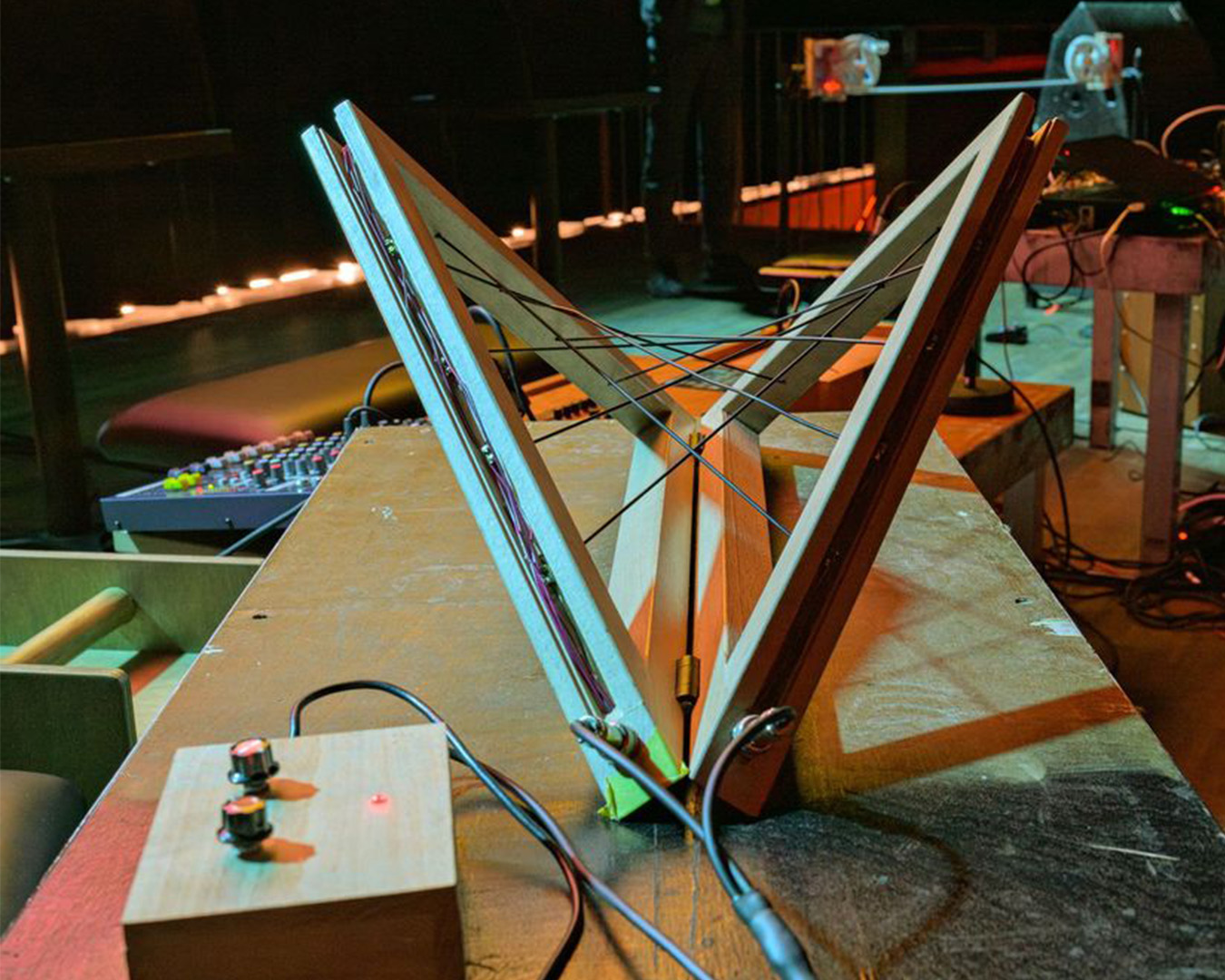

The Stickatron, the Elastiphone and the Perceptron are being developed as part of a series of studies that explores how entangled instruments can enable enjoyable, embodied experiences and intimate connections between people as they are played.

The Stickatron is played by players each holding one handle of the interface; together they lift and move the stick in space to explore a synthesised world. Pitch (vertical angle) and yaw (horizontal rotation) both have an effect, while the position of the stick’s mid-point in the instrument space (a cube with 1.5 m sides, centred on the sensing GameTrak) acts as a ‘3-dimensional playing cursor’.
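As an illustration of the geometry described above, the following minimal sketch derives pitch, yaw and the mid-point from the two handle positions. It is not the Stickatron's actual implementation: the function name `stick_pose`, the axis convention (y pointing up) and the use of metres are assumptions made for this example only.

```python
import math

def stick_pose(left, right):
    """Return (pitch_deg, yaw_deg, midpoint) for a stick held at two handles.

    left, right: hypothetical (x, y, z) handle positions in metres,
    with y pointing up (an assumed convention, not taken from the source).
    """
    # Vector running along the stick, from the left handle to the right one.
    dx, dy, dz = (r - l for l, r in zip(left, right))

    # Mid-point of the stick: the '3-dimensional playing cursor'.
    midpoint = tuple((l + r) / 2 for l, r in zip(left, right))

    # Pitch: angle of the stick above or below the horizontal plane.
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))

    # Yaw: rotation of the stick about the vertical axis.
    yaw = math.degrees(math.atan2(dz, dx))

    return pitch, yaw, midpoint
```

For example, `stick_pose((0, 0, 0), (1, 1, 0))` describes a stick tilted upwards towards the second handle, with its cursor halfway between the two handles.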

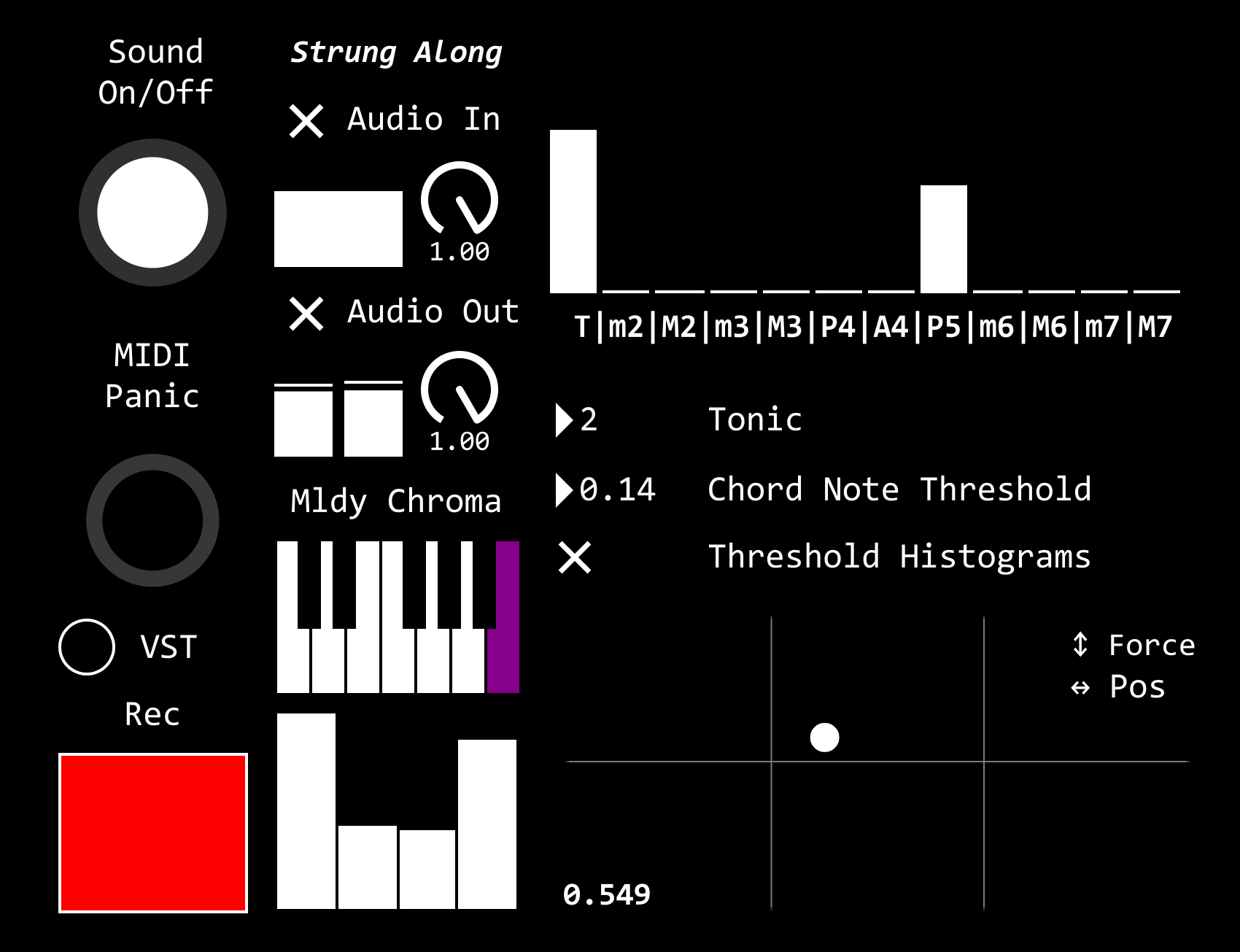

The Elastiphone replaces control of the synthesis system with a virtual elastic band between the players’ hands. The same data is extracted as for the Stickatron, but with the addition of the separation between the players’ hands. As with the Stickatron, VCV Rack is used for audio synthesis.
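The additional separation value can be sketched as the straight-line distance between the two hand positions. This is a hedged illustration rather than the Elastiphone's actual code: `hand_separation`, `band_stretch` and the `rest_length` parameter are hypothetical names, and the idea of a rest length for the virtual band is an assumption not stated in the source.

```python
import math

def hand_separation(left, right):
    """Euclidean distance between two (x, y, z) hand positions, in metres."""
    return math.dist(left, right)

def band_stretch(left, right, rest_length=0.5):
    """How far the virtual band is stretched beyond an assumed rest length.

    rest_length is a hypothetical parameter; a slack band reports 0.0.
    """
    return max(0.0, hand_separation(left, right) - rest_length)
```

A value such as `band_stretch(...)` could then be scaled and sent to the synthesiser alongside the pitch, yaw and mid-point data.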

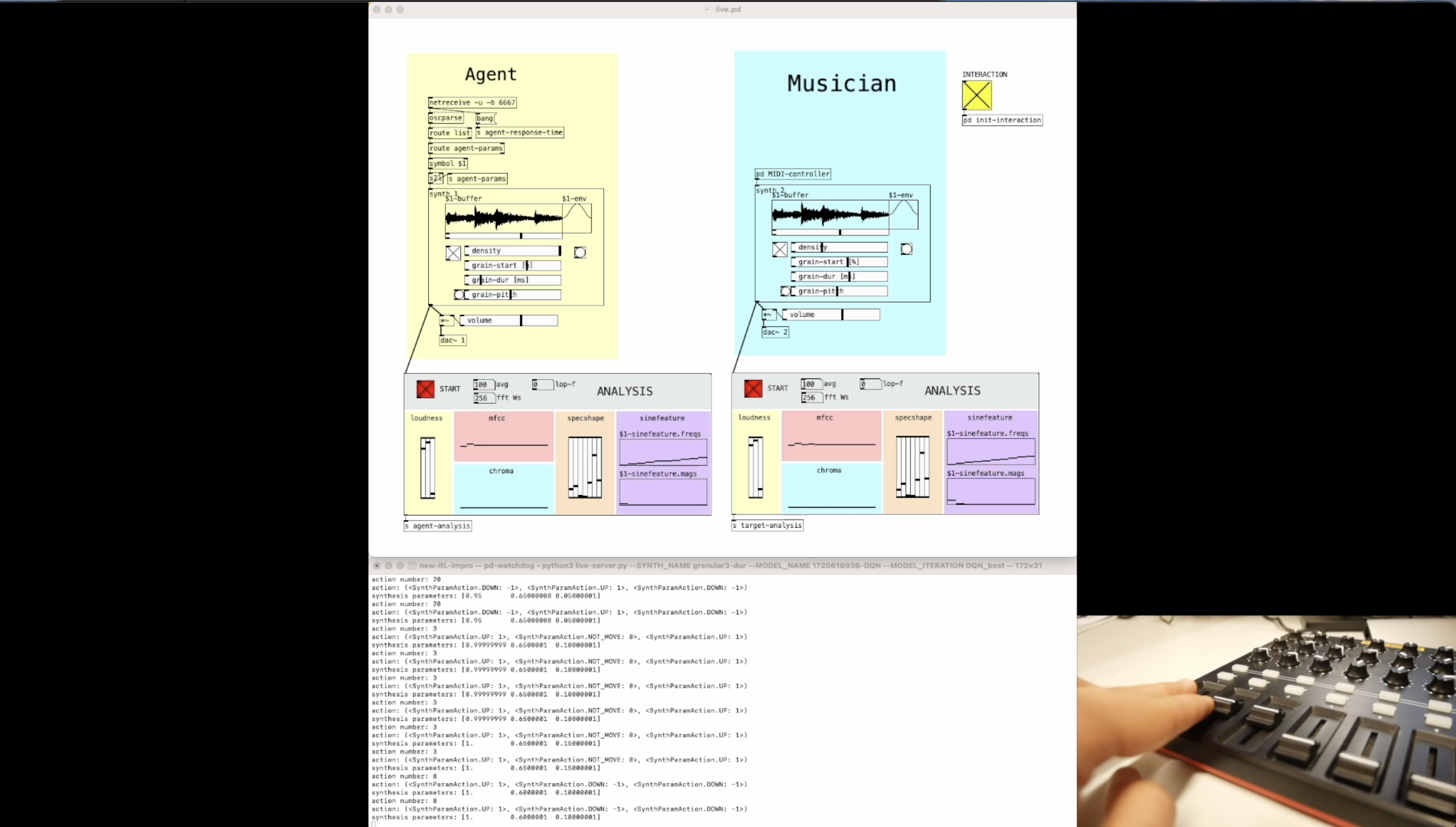

The Perceptron uses machine learning (a multi-layer perceptron) to combine the players' movements and to generate control data for a second machine learning model to create audio in real time [10].